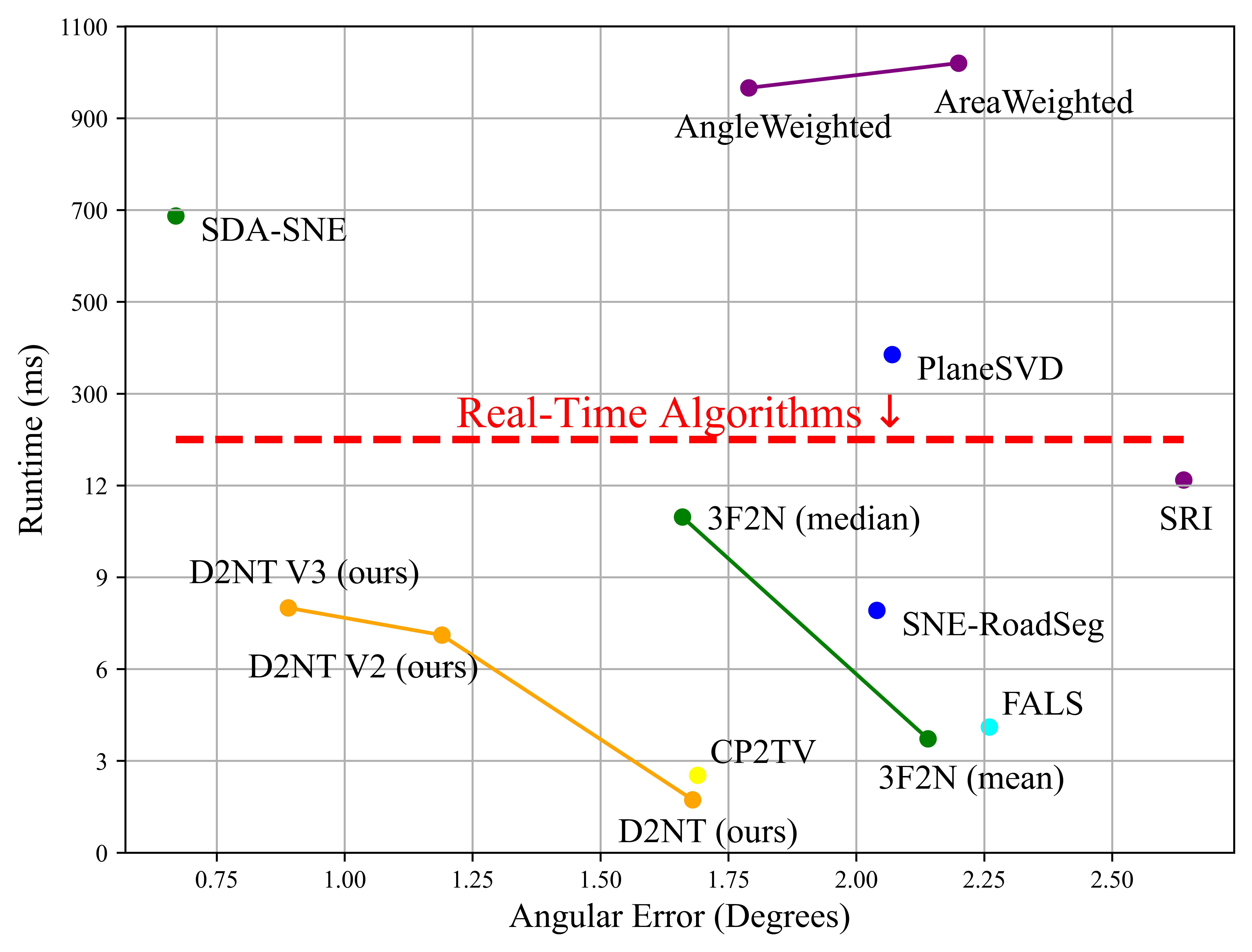

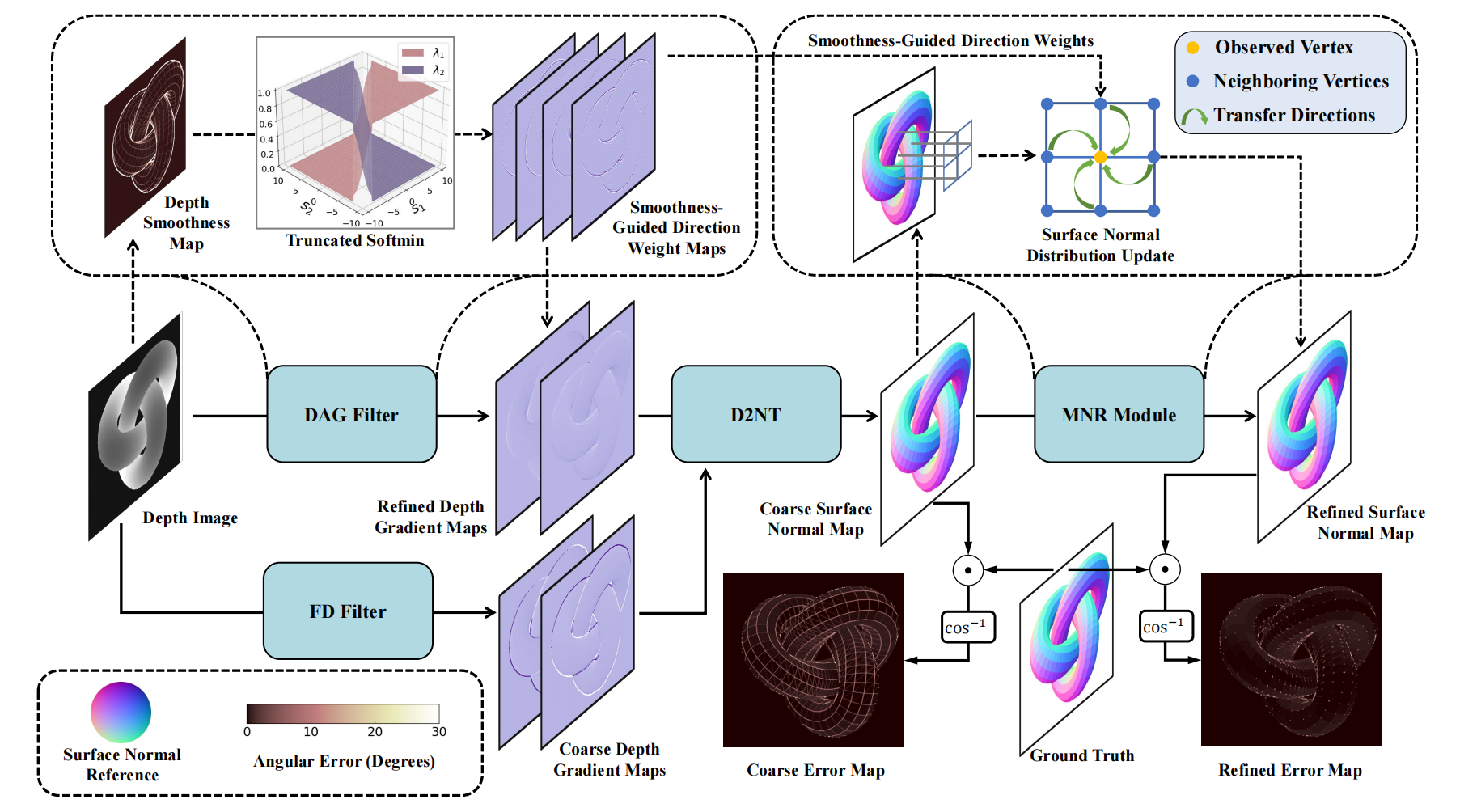

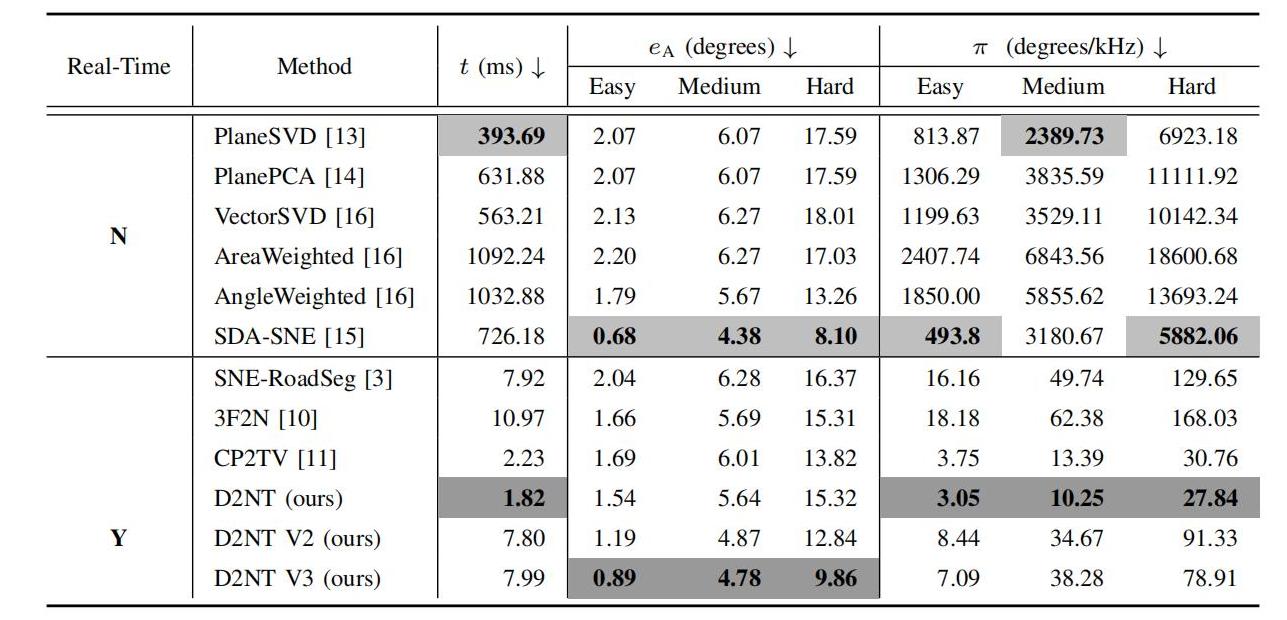

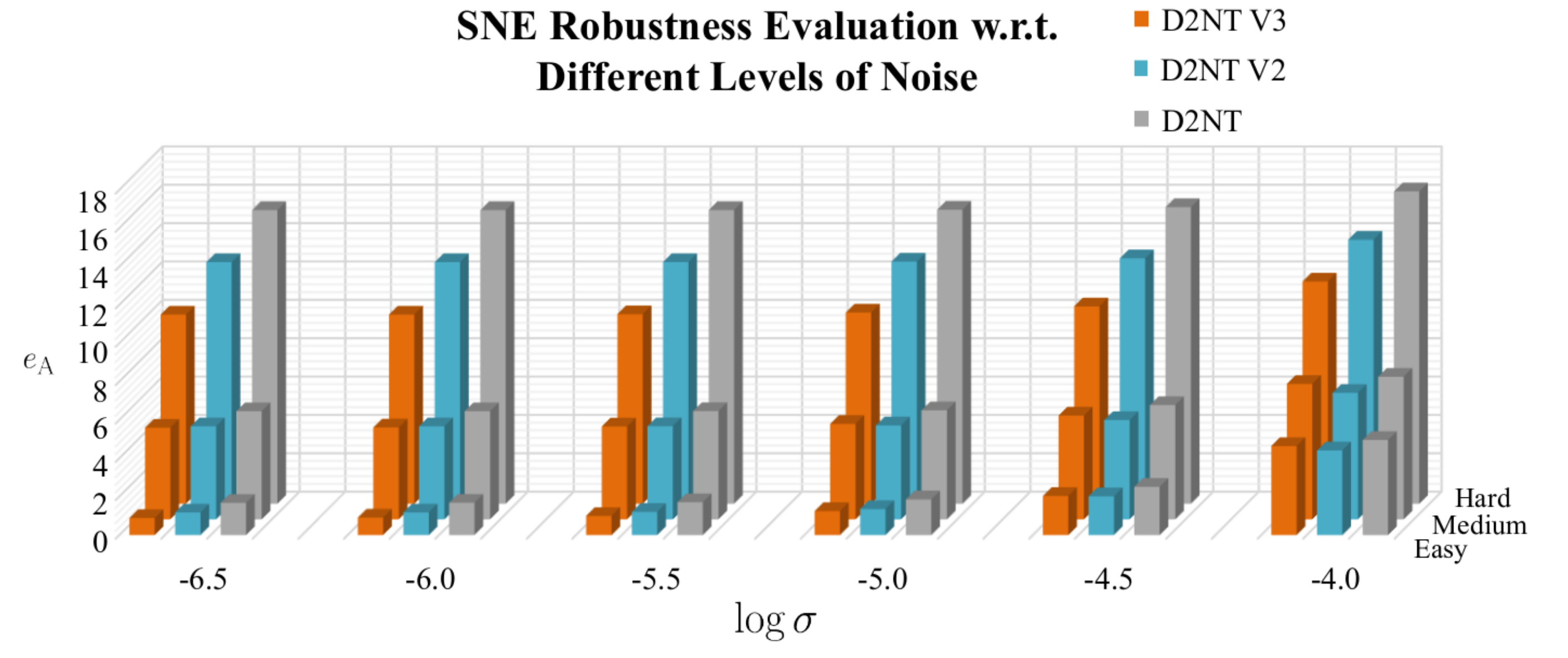

Surface normals are important in visual environmental perception because they provide rich geometric information. However, state-of-the-art surface normal estimators (SNEs) generally suffer from an unsatisfactory trade-off between efficiency and accuracy. To resolve this dilemma, this paper first presents a superfast depth-to-normal translator (D2NT), which directly translates depth images into surface normal maps without calculating 3D coordinates. We then propose a discontinuity-aware gradient (DAG) filter, which adaptively generates gradient convolution kernels to improve depth gradient estimation. Finally, we propose a surface normal refinement module that can easily be integrated into any depth-to-normal SNE, substantially improving surface normal estimation accuracy. Our proposed algorithm achieves the best accuracy among existing real-time SNEs and a state-of-the-art (SoTA) trade-off between efficiency and accuracy.

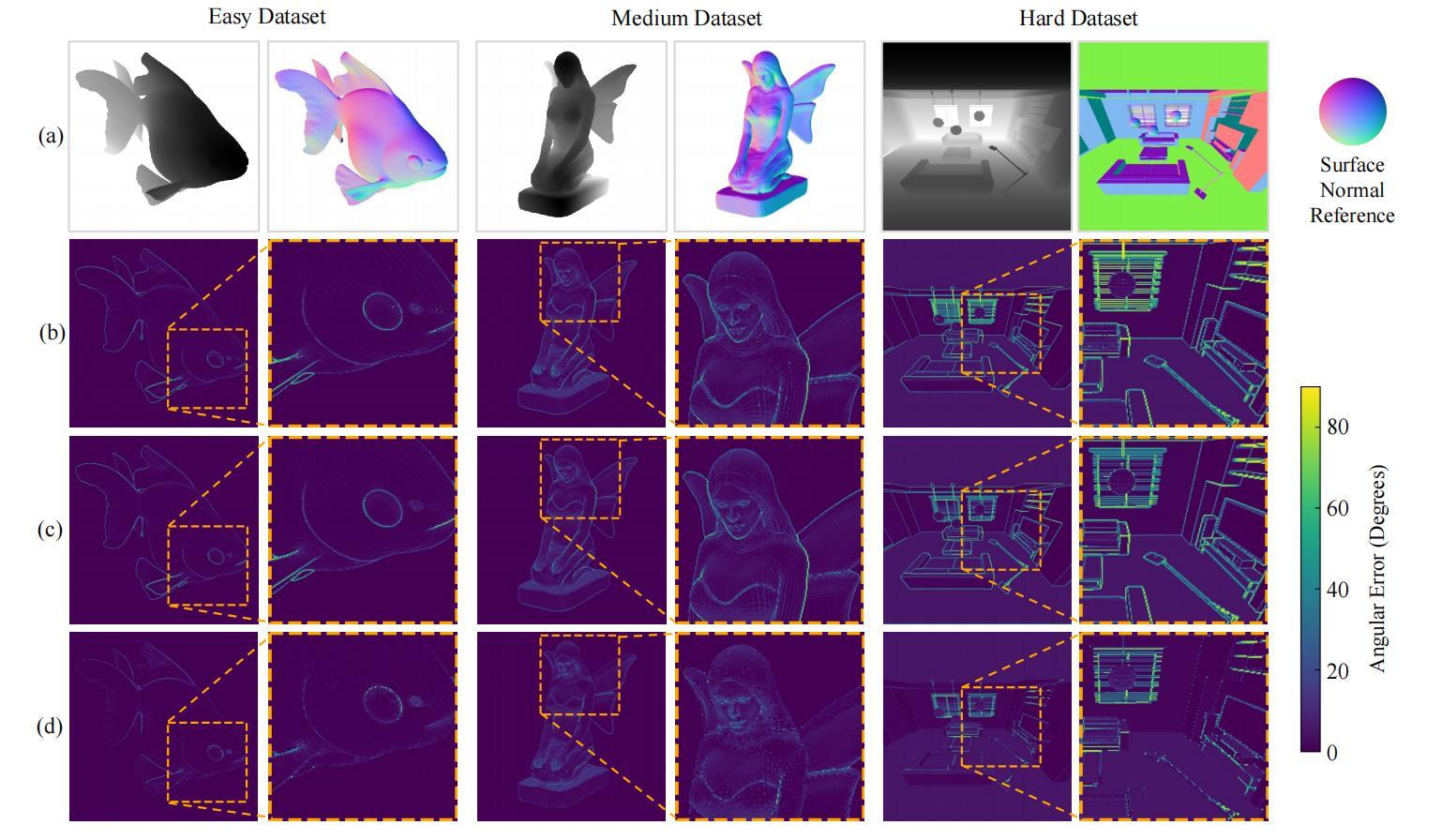

Illustration of our proposed D2NT, DAG filter, and MNR module. D2NT translates depth images into surface normal maps in an end-to-end fashion; the DAG filter adaptively generates smoothness-guided direction weights for improved depth gradient estimation in and around discontinuities; the MNR module further refines the estimated surface normals based on the smoothness of neighboring pixels.
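To make the idea of translating depth into normals without computing 3D coordinates concrete, here is a minimal sketch in Python with NumPy. It assumes a pinhole camera with focal lengths `fx`, `fy` and principal point `(u0, v0)`; under that model, differentiating the back-projection gives a normal proportional to `(fx * dZ/du, fy * dZ/dv, -(Z + (u - u0) * dZ/du + (v - v0) * dZ/dv))`. The function name `depth_to_normal`, its parameters, and the sign convention (normals facing the camera) are illustrative assumptions, and plain finite differences from `np.gradient` stand in for the DAG filter, so this should not be read as the authors' implementation.

```python
import numpy as np


def depth_to_normal(depth, fx, fy, u0, v0):
    """Sketch: per-pixel unit normals from a depth map, no 3D points needed.

    Assumptions (not from the source): pinhole intrinsics fx, fy, u0, v0,
    and np.gradient finite differences as a stand-in gradient filter.
    """
    depth = np.asarray(depth, dtype=np.float64)
    h, w = depth.shape

    # Pixel coordinates measured from the principal point.
    u = np.arange(w, dtype=np.float64)[None, :] - u0
    v = np.arange(h, dtype=np.float64)[:, None] - v0

    # Depth gradients: axis 0 is the row direction (v), axis 1 is the column direction (u).
    dz_dv, dz_du = np.gradient(depth)

    # Unnormalised normal expressed directly in terms of depth and its gradients.
    nx = fx * dz_du
    ny = fy * dz_dv
    nz = -(depth + u * dz_du + v * dz_dv)

    normal = np.stack([nx, ny, nz], axis=-1)
    length = np.linalg.norm(normal, axis=-1, keepdims=True)
    return normal / np.maximum(length, 1e-12)  # guard against zero-length vectors
```

For a fronto-parallel plane (constant depth) both gradients vanish and every pixel gets the normal `(0, 0, -1)`. Near depth discontinuities, plain finite differences blur the gradient across the edge, which is the situation the DAG filter and MNR module described above are meant to address.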

@inproceedings{feng2023d2nt,
  title={{D2NT}: A High-Performing Depth-to-Normal Translator},
  author={Feng, Yi and Xue, Bohuan and Liu, Ming and Chen, Qijun and Fan, Rui},
  booktitle={2023 IEEE International Conference on Robotics and Automation (ICRA)},
  pages={12360--12366},
  year={2023},
  organization={IEEE}
}