
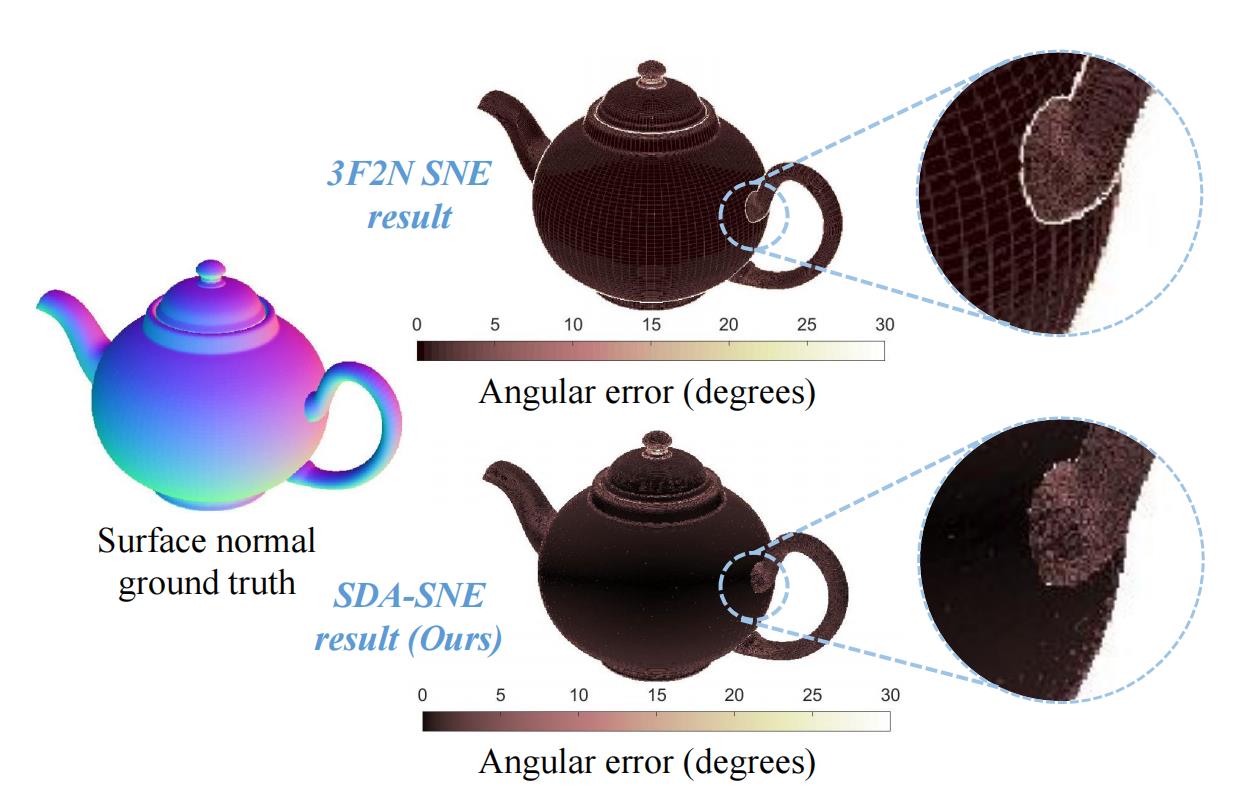

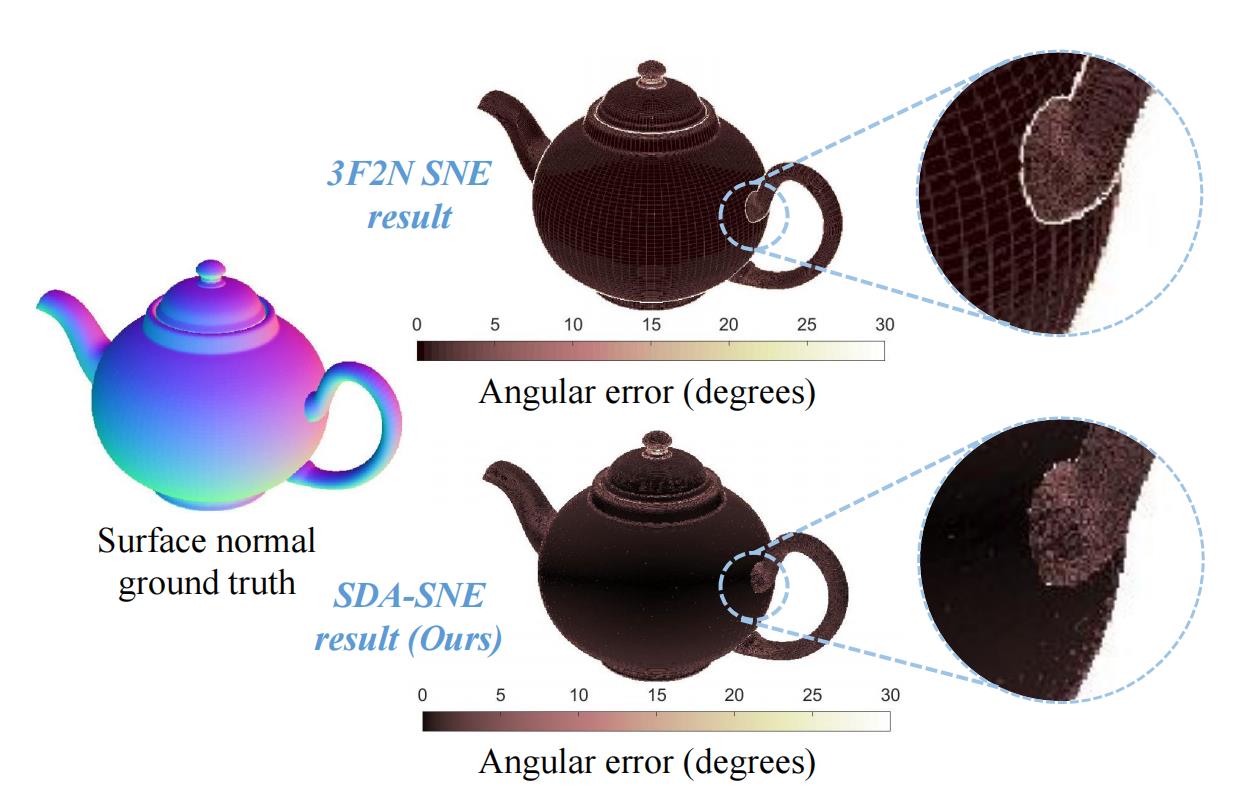

State-of-the-art (SoTA) surface normal estimators (SNEs) generally translate depth images into surface normal maps in an end-to-end fashion. Although such SNEs have greatly reduced the trade-off between efficiency and accuracy, their performance on spatial discontinuities, e.g., edges and ridges, remains unsatisfactory. To address this issue, this paper first introduces a novel multi-directional dynamic programming strategy that adaptively determines inliers (co-planar 3D points) by minimizing a (path) smoothness energy. The depth gradients are then refined iteratively using a novel recursive polynomial interpolation algorithm, which yields more reasonable surface normals. The proposed spatial discontinuity-aware (SDA) depth gradient refinement strategy is compatible with any depth-to-normal SNE. Our SDA-SNE achieves considerably better performance than all other SoTA approaches, especially near and on spatial discontinuities. We further evaluate the performance of SDA-SNE with respect to the number of iterations, and the results suggest that it converges within only a few iterations. This ensures its high efficiency in robotics and computer vision applications that require real-time performance. Additional experiments on datasets with different levels of random noise further validate SDA-SNE's robustness and environmental adaptability. Our source code, demo video, and supplementary material are publicly available at https://mias.group/SDA-SNE/.
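Because the refinement only changes depth gradients, its output can be handed to any depth-to-normal SNE. As a minimal sketch of that final step, assuming a pinhole camera with focal lengths `fx`, `fy` and principal point `(u0, v0)` (symbols not defined in the abstract), the normal is the cross product of the back-projected surface's partial derivatives. Both function names are hypothetical, and this is a textbook derivation rather than the SDA-SNE implementation.

```python
import math


def central_gradients(depth, u, v):
    """Naive central-difference depth gradients at interior pixel (u, v).

    `depth` is a list of rows indexed depth[v][u]. Near a depth edge the two
    samples can lie on different surfaces, which corrupts the result.
    """
    dz_du = (depth[v][u + 1] - depth[v][u - 1]) / 2.0
    dz_dv = (depth[v + 1][u] - depth[v - 1][u]) / 2.0
    return dz_du, dz_dv


def normal_from_depth_gradients(u, v, z, dz_du, dz_dv, fx, fy, u0, v0):
    """Unit surface normal at pixel (u, v) from depth z and its image gradients.

    Pinhole back-projection: P(u, v) = z * ((u - u0) / fx, (v - v0) / fy, 1).
    dP/du x dP/dv equals, up to the positive factor z / (fx * fy),
    (-fx * dz_du, -fy * dz_dv, z + (u - u0) * dz_du + (v - v0) * dz_dv).
    The result points away from the camera (+z for a fronto-parallel plane);
    negate it if your convention orients normals toward the camera.
    """
    nx = -fx * dz_du
    ny = -fy * dz_dv
    nz = z + (u - u0) * dz_du + (v - v0) * dz_dv
    norm = math.sqrt(nx * nx + ny * ny + nz * nz)
    return (nx / norm, ny / norm, nz / norm)
```

For a fronto-parallel plane the sketch returns (0, 0, 1). At a depth edge, `central_gradients` mixes samples from two surfaces, which is the kind of error near discontinuities that the abstract sets out to reduce.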
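The inlier-selection idea can be illustrated, under heavy simplification, with a Viterbi-style dynamic program along a single scanline: each pixel picks either its backward or its forward depth difference, and the total absolute change between consecutive chosen gradients serves as a path smoothness energy. The two-label setup, this energy, and the name `dp_one_sided_gradients` are assumptions for illustration; SDA-SNE's multi-directional strategy and its exact energy are defined in the paper, not here.

```python
def dp_one_sided_gradients(z):
    """Choose a backward or forward difference at every sample of a scanline
    so that the chosen gradient sequence is as smooth as possible.

    Energy (hypothetical): sum over i of |g_i - g_{i-1}|, minimized exactly
    by dynamic programming over two labels (0 = backward, 1 = forward).
    Returns (gradients, labels).
    """
    n = len(z)
    if n < 2:
        raise ValueError("need at least two depth samples")
    # candidates[i] = (backward difference, forward difference). The end
    # samples have only one valid one-sided difference, used for both labels.
    candidates = []
    for i in range(n):
        back = z[i] - z[i - 1] if i > 0 else z[1] - z[0]
        fwd = z[i + 1] - z[i] if i < n - 1 else z[n - 1] - z[n - 2]
        candidates.append((back, fwd))

    # cost[i][l]: lowest energy of any labeling of samples 0..i ending in l.
    cost = [[0.0, 0.0]]
    parent = [[0, 0]]
    for i in range(1, n):
        row_cost, row_parent = [], []
        for label in (0, 1):
            steps = [
                cost[i - 1][p] + abs(candidates[i][label] - candidates[i - 1][p])
                for p in (0, 1)
            ]
            p_best = 0 if steps[0] <= steps[1] else 1
            row_cost.append(steps[p_best])
            row_parent.append(p_best)
        cost.append(row_cost)
        parent.append(row_parent)

    # Backtrack from the cheaper final label.
    label = 0 if cost[-1][0] <= cost[-1][1] else 1
    labels = [label]
    for i in range(n - 1, 0, -1):
        label = parent[i][label]
        labels.append(label)
    labels.reverse()
    gradients = [candidates[i][labels[i]] for i in range(n)]
    return gradients, labels
```

On the scanline `[0, 1, 2, 3, 10, 11, 12, 13]`, the two pixels adjacent to the depth step each choose the difference that stays on their own surface, so every returned gradient is 1.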
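For the refinement step, one standard recursive interpolation scheme is Newton's divided differences: fit a polynomial through a pixel's inlier depths and differentiate it at that pixel. This scheme, the name `newton_derivative_at_first`, and the use of pixel offsets as `xs` are assumptions swapped in for illustration; the paper's own recursive polynomial interpolation and its iteration loop are not reproduced here.

```python
def newton_derivative_at_first(xs, zs):
    """Derivative at xs[0] of the polynomial interpolating (xs[i], zs[i]).

    Divided differences follow the recursion
        f[x_i..x_{i+k}] = (f[x_{i+1}..x_{i+k}] - f[x_i..x_{i+k-1}]) / (x_{i+k} - x_i),
    and the Newton form p(x) = sum_k f[x_0..x_k] * prod_{j<k} (x - x_j) gives
        p'(x_0) = sum_{k>=1} f[x_0..x_k] * prod_{j=1}^{k-1} (x_0 - x_j).
    The xs must be distinct.
    """
    n = len(xs)
    if n != len(zs) or n < 2:
        raise ValueError("need at least two (x, z) samples of equal length")
    table = list(zs)          # overwritten in place, one level per pass
    leading = [table[0]]      # f[x_0], f[x_0, x_1], f[x_0, x_1, x_2], ...
    for k in range(1, n):
        for i in range(n - 1, k - 1, -1):
            table[i] = (table[i] - table[i - 1]) / (xs[i] - xs[i - k])
        leading.append(table[k])

    # Differentiate the Newton form at x_0.
    deriv, prod = 0.0, 1.0
    for k in range(1, n):
        deriv += leading[k] * prod
        prod *= xs[0] - xs[k]
    return deriv
```

For example, the depths `[3, 2, 1]` at offsets `[0, -1, -2]` (the smooth side of an edge) give a gradient of 1, with no contribution from depths beyond the edge.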
